How do humans perceive robots?

Abstract: From a purely technical point of view, we use machines daily that might be considered robots. Indeed, cars, automatic assembly lines, washing machines, printers and cash dispensers are robotic entities that surround us and simplify our lives. However, many people believe that a robot is different from these examples, even though a robot, by definition, is an intelligent system able to interact with its environment autonomously. Clearly, there is a subjective barrier in people’s minds: on one side, we have fascinating and intriguing robots; on the other, banal automata. One answer to the title’s question is to try to understand what creates and supports this barrier of perception.

About the difficulty of defining robots

Before developing the topic of how humans perceive robots, we must first agree on the central object of this discussion. Robots are peculiar entities: neither plant, animal, nor human. So what are they? In the 1950s a famous roboticist confessed that he was unable to characterize robots; if something was presented to him, he could only tell whether it wasn’t a robot. At first glance, then, trying to establish a definition of a robot seems pointless. Nevertheless, in folklore, robots are magical and somehow limitless systems that can do everything you want, solve any of your problems, and so on. From my own experience discussing robots and my research with nontechnical audiences, I can sense some fear and a lot of curiosity. Why do people behave this way toward roboticists? Why do non-specialists think that we are building impossible systems? Why do they think that we are too futuristic? This situation recurs, and even when I explain that we only work with machines made of metal and endowed with advanced software, I am still met with doubt. My answers are accepted politely, and my interlocutors accuse me of an excess of humility. Robots may inspire both fear and fascination. Perhaps fear and fascination are the consequences of the two faces of our technology: the good one that contributes to wellbeing, and the bad one that creates joblessness and supports war.

The fact is that, up to a certain complexity, robots are no more advanced than washing machines: they obey preprogrammed sequences for acting on the world and reacting to its changes. Viewing robots this way is reductionist, but in many cases not far from the truth.

In my opinion, the title’s question has as many valid answers as there are different robots and different observers. In this submission, I’ll try to describe my understanding of the relationships (real or hypothetical) that humans and robots may have. In some cases, I’ll rely on factual evidence drawn directly from current research; in other cases, I’ll share working or prospective hypotheses, not yet verified, about the human mental processes that shape our representations of robots.

Robotics and the folklore

We are shaped and formed by our cultural background and by what we acquire in daily life, and the imprint of such acquisitions can be deeper than one realizes. The impact of current technological advances is not yet clear, but it is palpable. As a matter of fact, one can see how comfortable young people are with mobile phones and other PDAs. For robotics, the picture is somewhat different: we don’t yet have large-scale deployments of robots, and robotics is by essence not a new concept. In my opinion, a good way to understand how robotics is viewed by our societies is to read its history, from ethnographic, social and economic perspectives. Robots certainly didn’t exist in prehistory; however, we can easily assume that the concept of the ‘superhuman’, popularized through legends, has influenced and shaped our modern vision of robots.

1. The myth era: the artificial superhuman

Human history is full of myths and stories about supermen, that is, extraordinary creatures with incredible capabilities. The idea of creating machines that are shaped like humans and behave like them is old, and stories about mythical artificial people behaving like real ones have come down to us from the past. The Greek legend of Talos, the bronze giant guarding Crete, or the Jewish story of the clay golem, for instance, shaped beliefs in extraordinary artificial entities made to serve as slaves or guardians. A step closer came with the first actual, working mechanical systems. Ctesibius and, later, Hero of Alexandria created numerous mechanisms powered by air pressure and water. In China, Su Song built a clock tower with figurines chiming the hours. In the Arab world, Al-Jazari developed some of the first humanoid automata that played music. Leonardo da Vinci and, later, Vaucanson built human-sized figures as well as early animats (such as Vaucanson’s duck, which flapped its wings). All these creations were a demonstration of power: each nation pushed forward the design of advanced and complex systems.

More recently, ‘Frankenstein’, the successful US film, was projected on screens. Its central character was created through chemistry and alchemy and brought to life by a scientist. About a decade earlier, the word robot had been introduced by the Czech writer Čapek in his famous play RUR (Rossum’s Universal Robots), which described a factory producing human-like autonomous machines. Although the word robot starts with Čapek, the concept of human-like machines carrying out human aims and commands without complaint is much older.

The other mythical aspect of early robotics concerns Asimov’s laws. These laws sound like the Ten Commandments in the Bible: ‘the robot may not injure…, the robot must obey…, the robot must protect…’, and they are a quasi-theological statement about robotics. The parallel with the Bible made here must be considered carefully. The Western vision of robots differs from the Eastern one, as we will see later: the Japanese typically associate human-robot interaction with comfort, while Westerners associate it with fear.

Beyond the subjectivity and ineffectiveness of the pre-robotics era, one can notice that the creation scheme started with mechanical systems and moved toward an ultimate stage: a quasi-human with a soul and a mind. Secondly, the main goal behind each creation was to demonstrate a nation’s power and advances. Indeed, the main purpose of such creations was to maintain supremacy over laypeople: whoever masters such creations holds power for both internal and external needs.

2. The industrial era: the hard worker

After Čapek’s play and Asimov’s stories, the rationalization of the means and tools of production became a crucial issue for the economies of developed countries. The Second World War increased this need, and robotics became a decisive war tool: the development of nuclear weapons required dexterous and effective manipulators to handle toxic and hazardous material. At that time, only machines directly controlled by humans could meet these requirements. Anthropomorphic arms relying on human intelligence and motor control were developed at Los Alamos. Goertz presented his teleoperation system, in which a master (a human) controlled a slave (an anthropomorphic robotic arm) to handle radioactive material. With effectiveness as the core target, engineers at Argonne National Laboratory admitted that the best controller was a human being.

This cooperative configuration remained valid until the 1960s, when a new configuration with autonomous arms (without direct human control) was introduced. The industrial Unimate was presented as the solution to increase production and product quality. These machines could perform very simple pick-and-place tasks, and, perhaps for marketing reasons or because of their anthropomorphism, they were named robots. People accepted this, but with some apprehension about their jobs (the equation ‘one robot = one lost job’ was very popular).

3. The intelligence era: the autonomous machine

In the mid-1950s, a group of researchers launched the idea of ‘Artificial Intelligence’. With the advance of new computing capabilities, AI proved promising for solving complex problems that even humans cannot solve. After ‘the chess player’, ‘the salesman’ and various ‘health diagnosis’ systems, researchers were pushed toward more visible and more impactful demonstrators. In this perspective, the Stanford Robotics Institute introduced a big change: H. Moravec, with his HERBERT robot, freed the Unimate arm, allowing it to move around autonomously. This created a platform to test artificial intelligence theories empirically. The idea of a system moving autonomously by processing sensing data, reasoning about its environment and generating motion controls was complex. HERBERT raised many problems to solve (locomotion, sensing and decision-making); nevertheless, it was copied all over the world. The third generation of robots had arrived.

Moreover, mobile robotics, together with other technological advances, made industrial robotics more capable of performing complex manipulation tasks and of solving difficult navigation problems. This capability grew further when cheap, small PCs became widespread. They opened promising new doors in artificial intelligence, automatic control, sensing, signal processing, locomotion, etc., for handling unexpected changes and variable environments. Mobile robotics brought new ways of thinking about machines and new, now popular, applications such as surgical robotics, planetary exploration (Mars exploration missions), search and rescue robots, etc.

What is intriguing about HERBERT, and about mobile robotics more generally, is that a machine with wheels, far from anthropomorphic (apart from its visual sensing system), was called a robot. Was it for more visibility and more impact when seeking funds, or was it for functional anthropomorphism? Historians of robotics may answer this question one day.

4. The humanoid era: mirroring ourselves

As in the pre-robotics era, robotics research is strongly directed toward, and supported in, so-called bio-inspired robotics: animats in general and humanoids in particular. The main hypothesis of bio-inspiration is that nature has performed an effective selection, so that only the most suitable organisms (those able to cope with their environments) have survived. For engineers, bio-inspiration simplifies the work and greatly reduces the design effort: they have a free source of inspiration. The first such inspiration was tested in the 1950s with the PERCEPTRON, an artificial neural network whose architecture was inspired by real neural systems and which was able to recognize alphanumeric characters.

In recent years many animats have been built, including fish, rats, dogs, monkeys, etc. In addition to solving some functional problems (locomotion, embedded energy, specific sensing, etc.), bio-inspired robotics has spurred many other research areas, particularly materials science, nanotechnology and computing (embedded and massively parallel computers). Needless to say, bio-inspiration is a serious contributor and a major factor in reinforcing the myth that robotics researchers are able to reproduce nature.

One particular theme within bio-inspired robotics is humanoid robotics; it is unique not only because it is the most complex but also because it has the greatest impact. This trend started in the early 1970s in Japan and still employs a huge number of people and labs. The guiding principles of this effort are not yet clear (bio-inspiration or the ultimate robot?), nor are the motivations behind building humanoid robots.

The main justification one hears centers on the idea that ‘if shaped like a human, the robot will be better accepted than a wheeled robot’. This is not exactly true, as we will see in the last section. The other tendency (which is, by the way, more coherent) considers the robot as a platform to test theories and models of human physiology and of cognitive and neuronal functions. In other words, this strand of humanoid robotics is first concerned with decoding human complexity, in the hope of reproducing it (in prostheses, for instance) in the near future.

As one might notice, here the ultimate goal is the artificial human: a kind of mirror allowing us to understand ourselves before moving on to more therapeutic considerations.

New trends of robotics

After this very brief review of the history of robotics and pre-robotics, one cannot find any specific topic related to the question we are trying to answer. In fact, the question is (relatively) new to robotics. The first to tackle it were people working in teleoperation. Indeed, interacting with remote robots raises the problem of how the remote machine is perceived and how this affects operational performance. The first studies in this domain were initiated with the help of psychologists and human factors specialists. These studies revealed a new dichotomy: robots can be seen either as partners or as tools.

To simplify, we can say that the first stream is embodied by humanoid robotics, while the second is more related to teleoperation. However, both share a common ground: ‘the relationship with robots’ and, obviously, the way humans perceive and think about robots.

Interaction studies began from the perspective of humanoid robots in homes, and the question of human-robot relationships became fundamental. As usual, engineers thought about deriving guidelines to build the robot companion that can help elderly and disabled people in their daily tasks. My research background is based on the idea that how humans perceive robots is not just a philosophical question: one must understand what mechanisms support human-robot interaction. Unfortunately, there is neither a proven method nor an accepted metric for measuring the quality of the relationship between humans and robot companions. Social and emotional robotics is the new research field investigating this issue. The key point is to be able to measure the human acceptability of robots. From a methodological point of view, one should start by understanding and modeling the processes underlying the perception of robots, and then quantify acceptability using these models. This issue is more than conceptual, and it has huge economic consequences: how can we build robots that users accept as such?

A suggestion: a unified formalism to assess and use the uncanny valley.

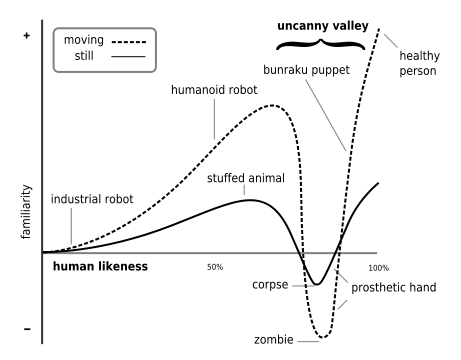

The uncanny valley is the holy grail of robotics these days. Mori was the first to introduce the concept, in the 1970s. He proposed an objective measure of ‘how humans perceive robots’: he expressed the comfort one feels in the presence of robots, artificial or non-conventional creatures as a function of how anthropomorphic the latter are. This function has a characteristic shape: comfort increases with human likeness, falls when creatures come close to human shape, and increases again when the entity is perceived as human. The phenomenon can be summarized by the following formula: “too far no fear, too close too far”. When the ‘creatures’ are very different from us (a toy robot, for instance), we feel confident. On the contrary, when the ‘creatures’ look like us (the GEMINI robot), our natural tendency is to be afraid and to “move” away from them. In other words, one feels comfortable with animal-shaped robots and with humans, but this comfort decreases in the presence of robots very similar to humans.
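The shape Mori describes can be made concrete with a toy curve. The sketch below is purely illustrative and rests on my own assumptions, not on Mori’s data: likeness is a number in [0, 1], comfort grows linearly with it, and the valley is modeled as a Gaussian dip centered just below full human likeness. The function name `toy_comfort` and its parameters (`dip_center`, `dip_width`, `dip_depth`) are hypothetical.

```python
import math


def toy_comfort(likeness: float,
                dip_center: float = 0.85,
                dip_width: float = 0.05,
                dip_depth: float = 1.5) -> float:
    """Hypothetical comfort score for a human likeness in [0, 1].

    Comfort rises linearly with likeness, minus a Gaussian 'valley'
    centred near, but below, full human likeness. The parameter values
    are illustrative assumptions, not measurements.
    """
    if not 0.0 <= likeness <= 1.0:
        raise ValueError("likeness must be in [0, 1]")
    # Gaussian dip: largest at dip_center, negligible far from it.
    valley = dip_depth * math.exp(-((likeness - dip_center) ** 2) / (2 * dip_width ** 2))
    return likeness - valley


# Sample a toy robot, a stylized humanoid, a near-human android and a human.
for x in (0.2, 0.5, 0.85, 1.0):
    print(f"likeness={x:.2f}  comfort={toy_comfort(x):+.2f}")
```

Evaluating the curve at these four points traces the rise, the fall and the recovery; changing the parameters moves or deepens the valley, and those values are precisely what would have to be measured rather than assumed.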

Why does such a phenomenon exist, and what mechanisms lead to these reactions? Even if one can intuitively accept and observe the effect, the core question is still under debate, and no clear proof of either its existence or its nonexistence has been established. Interestingly, Mori’s hypothesis has generated a large body of research, not only in robotics but also in psychology, cognitive science and neuroscience.

5. Empathy

There are many different definitions of empathy. Let us consider this one: observing reality through someone else’s eyes. This definition corresponds to what is known as perspective taking, one of the main cognitive components of empathy and thus of social relations between humans. This capability allows us to feel what the other feels (including their mental state) and thus to predict their behavior.

It follows that it is difficult to figure out the reactions and behaviors of living beings that differ from us, such as animals. Likewise, an adult with a baby or an elderly person experiences similar difficulties. If we were facing our clone (the asymptotic other), we would understand it, and its behavior would conform to our expectations. However, in the presence of a robot such as the GEMINI, noticeable rejection can be observed very quickly (my own experience!). Why? One plausible hypothesis is that the perspective-taking process does not take place: since robots and humans belong to two different sensory-motor spaces, some critical perceptual features are missing or distorted.

6. Theory of mind (a novice point of view)

The theory of mind is a way to model the prediction process that leads someone to understand another’s intentions and actions. If we consider this predictive model in light of what was discussed about empathy, one can argue that adults are more tolerant of perceptual prediction errors when facing babies or elderly people than when facing other adults or realistic humanoid robots. In a sense, the errors are weighted according to who the vis-à-vis is.
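The weighting idea can be written down as a toy model. In this sketch, which is a hypothetical formalization rather than an established model, each counterpart has an assumed similarity to the observer and a raw prediction error, both in [0, 1], and the felt discomfort is simply their product. The class `Counterpart`, the function `weighted_error` and all numeric values are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Counterpart:
    """A hypothetical vis-a-vis as seen by an adult observer."""
    name: str
    assumed_similarity: float  # how close to "us" the observer assumes it is, in [0, 1]
    prediction_error: float    # mismatch between predicted and perceived behavior, in [0, 1]


def weighted_error(c: Counterpart) -> float:
    """Prediction error scaled by assumed similarity: the closer the
    counterpart is assumed to be, the less its errors are tolerated."""
    return c.assumed_similarity * c.prediction_error


# Illustrative, made-up values; not measurements.
counterparts = [
    Counterpart("toy robot", assumed_similarity=0.1, prediction_error=0.9),
    Counterpart("baby", assumed_similarity=0.4, prediction_error=0.6),
    Counterpart("realistic android", assumed_similarity=0.9, prediction_error=0.6),
    Counterpart("adult human", assumed_similarity=1.0, prediction_error=0.1),
]

# Print counterparts from most to least tolerated.
for c in sorted(counterparts, key=weighted_error):
    print(f"{c.name:18s} {weighted_error(c):.2f}")
```

With the same raw error of 0.6, the baby scores 0.24 while the realistic android scores 0.54: the weighting alone separates them, and the android ends up with the highest weighted error, which is one way to read the valley.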

7. Mirror neurons

The last component is the physiology supporting the previous two concepts. There is some physiological evidence for a biological substrate in which empathy or a similar process takes place (or is at least active). A large body of literature exists on this topic and on how these neurons relate to empathy and the theory of mind. What is important to keep in mind here is that we have access (though not as much as needed) to this substrate and can derive objective measurements from it.

These three components can be combined both to explain the uncanny valley and to help understand the human perception of robots. This challenging task could be addressed along three axes:

- (1) Exploring in depth the external features that can be extracted according to empathy theory: modeling, detecting and recognizing observable emotions (facial expressions, gestures and speech, for instance);

- (2) Building strong prediction models on top of (1), relying on the theory of mind;

- (3) Assessing and measuring (1) and (2) objectively through mirror neurons.

In conclusion

When I started thinking about the topic of this contribution (some years ago), the main question I had in mind was the following: why does our own behavior change when we face novelty or unexpected behavior, not necessarily from robots but from other human beings? The natural answer that came to mind is that if we are tolerant in terms of accepting other cultures, we can easily understand (though not necessarily accept) any behavior we are not used to. If we are not tolerant, we are likely to be shocked. The reason could be any incoherence between our expectations and our actual perception. Somehow we modulate the errors between our expectations/predictions and our perception: the closer the vis-à-vis is to us (or is assumed to be), the less we tolerate prediction errors. With robots, we should show the same consideration and tolerance, if not more.

The conclusion of this contribution is self-evident: before answering the title’s question, we first have to answer the question ‘how do humans perceive humans?’
